Today I will be discussing my understanding of the paper "Universal Backdoor Attacks". The paper explores another exciting exploit that can be leveraged against popular convolutional models such as ResNets.

What Is a Universal Backdoor?

A backdoor is an alternate entrance to your house. In computing and security in general, it means an illicit way of accessing a system (or, in this context, a model). The paper discusses how a backdoor can be universal, i.e. a single backdoor that lets the attacker steer the model toward many different target classes instead of just one, as we will see further.

Model Poisoning

Model poisoning (more precisely, data poisoning) is when you corrupt the data that a model is going to be trained on. One way to do this is to manipulate both the data and the labels; this is called a poison-label attack. Another is to poison only the data and leave the labels untouched, which makes the attack stealthier and harder to spot on human inspection; this is called a clean-label attack. In this context, the data we are referring to is image data.
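The difference between the two attack types comes down to what happens to the label. Here is a toy NumPy sketch, assuming images are (H, W, C) arrays and the trigger is a small patch pasted into the top-left corner; the helper names are hypothetical.

```python
import numpy as np


def apply_trigger(image: np.ndarray, trigger: np.ndarray) -> np.ndarray:
    """Paste a small trigger patch into the top-left corner of an (H, W, C) image."""
    poisoned = image.copy()  # never modify the original sample in place
    h, w = trigger.shape[:2]
    poisoned[:h, :w] = trigger
    return poisoned


def poison_label_sample(image, label, trigger, target_label):
    """Poison-label: the image gets the trigger AND its label is flipped to the target."""
    return apply_trigger(image, trigger), target_label


def clean_label_sample(image, label, trigger):
    """Clean-label: only the image changes; the original, correct label is kept."""
    return apply_trigger(image, trigger), label
```

Both variants produce the same image; only the returned label differs, which is why a human skimming a clean-label dataset sees nothing obviously mislabeled.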

The paper proposes a clean-label attack where only the image is manipulated and the labels are left untouched. The model is trained on the poisoned data and learns to associate the poison (the added impurity) with a specific label. This can then be leveraged in an inference-time attack: you apply this poison to an image of your choice and force the model to predict the label it learned to associate with the poison. Sleek!

Methodology

So how does this attack work? The main goal is to craft trigger patterns (poisons) that, when applied to images of one class, not only let us force a model to classify an unseen image as that class but also help the triggers for other classes work. That is, if we poison a certain class more heavily, a completely different class’s poisonability also increases, even if we poisoned only 1-2 images of that other class.

Surrogate Model

The idea is to craft each trigger pattern so that it shares some similarity with its class in the model’s latent space. Since we don’t have access to the victim model itself, and therefore not to its latent space, we use a surrogate model.

According to the paper, the surrogate model can be a pre-trained CLIP or ResNet model. With the surrogate in hand, we select a few sample images per class and compute their latent representations.

The latent space is the space of feature representations that the model computes for an image. Each dimension captures some aspect of the image, usually in a way that is not easily comprehensible to the human mind.

Latent Space

Once we have collected the latent representations, we need to reduce their dimensionality, since latent vectors are typically high-dimensional. The paper does this with LDA (Linear Discriminant Analysis), which reduces the latent space to n dimensions, where n is a chosen hyperparameter. Unlike unsupervised methods such as PCA, LDA uses the class labels and keeps the directions that best separate the classes, which is exactly what we want when the goal is to capture each class’s distinctive features.
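A minimal NumPy sketch of the LDA step, written out by hand so the mechanics are visible (scikit-learn’s `LinearDiscriminantAnalysis(n_components=n)` does the same job). The function name `fit_lda` is my own. Note that LDA yields at most (number of classes minus 1) informative directions, so n must be smaller than the number of classes.

```python
import numpy as np


def fit_lda(latents: np.ndarray, labels: np.ndarray, n_components: int) -> np.ndarray:
    """Return a (D, n_components) projection matrix using Fisher's LDA.

    latents: (N, D) surrogate features; labels: (N,) integer class ids.
    """
    classes = np.unique(labels)
    overall_mean = latents.mean(axis=0)
    d = latents.shape[1]
    s_within = np.zeros((d, d))   # scatter of samples around their class mean
    s_between = np.zeros((d, d))  # scatter of class means around the overall mean
    for c in classes:
        x_c = latents[labels == c]
        mu_c = x_c.mean(axis=0)
        centered = x_c - mu_c
        s_within += centered.T @ centered
        diff = (mu_c - overall_mean)[:, None]
        s_between += len(x_c) * (diff @ diff.T)
    # Solve the generalized eigenproblem S_b w = lambda S_w w; the pseudo-inverse
    # guards against a singular S_w when D is large relative to N.
    eigvals, eigvecs = np.linalg.eig(np.linalg.pinv(s_within) @ s_between)
    order = np.argsort(eigvals.real)[::-1]  # most discriminative directions first
    return eigvecs[:, order[:n_components]].real
```

The compressed representation is then simply `compressed = latents @ fit_lda(latents, labels, n)`.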

Binary Encoding

After this, for each class in the sample dataset, we compute the mean of its compressed latent representations and store it, giving an average latent representation per class. The next step is crafting an encoding for each class.

The encoding is based on whether the i-th feature of the class mean is greater than the i-th feature of the centroid of all class means. The result is a binary string that encodes the distinctive features of each class in the compressed latent space.
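The class-mean and encoding steps fit in a few lines. This sketch assumes `compressed` is the LDA output (one row per sample image) and `labels` holds integer class ids; `class_encodings` is a hypothetical helper name.

```python
import numpy as np


def class_encodings(compressed: np.ndarray, labels: np.ndarray) -> dict[int, np.ndarray]:
    """Map each class id to a binary code over the n compressed dimensions."""
    classes = np.unique(labels)
    # Mean compressed latent per class, shape (num_classes, n).
    class_means = np.stack([compressed[labels == c].mean(axis=0) for c in classes])
    # Centroid of the class means, shape (n,).
    centroid = class_means.mean(axis=0)
    # Bit i is 1 when the class mean exceeds the centroid in dimension i.
    bits = (class_means > centroid).astype(np.uint8)
    return {int(c): b for c, b in zip(classes, bits)}
```

On a toy input where class 0 has mean [2, 0] and class 1 has mean [0, 5], the centroid is [1, 2.5], so class 0 gets the code [1, 0] and class 1 gets [0, 1].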

Poisoning

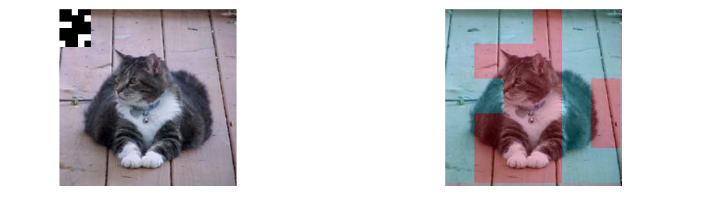

Finally, to poison the dataset, we iterate through it, randomly selecting images and applying the trigger to them. The paper proposes two ways of doing so: a patch trigger (left) and a blended trigger (right).

The paper uses the patch trigger. Say you want a cat image to be misclassified as a dog: you would take the dog encoding we calculated earlier and apply it as a patch in the top-left corner of the image. The patch size is your choice; the paper experimented with an 8×8 patch.
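The poisoning step could be sketched as follows. This is not the paper’s code: rendering the binary code as an 8×8 patch with one bit per pixel (so n = 64) is my assumption, the blend weight `alpha` is an arbitrary example value, and `poison_dataset` stamps each chosen image with its own class’s trigger while leaving labels untouched, following the clean-label description earlier in this article. Images are assumed to be float arrays in [0, 1] with shape (H, W, C).

```python
import numpy as np


def bits_to_patch(bits: np.ndarray, size: int = 8, channels: int = 3) -> np.ndarray:
    """Render a binary code as a (size, size, channels) patch, one bit per pixel.

    The one-bit-per-pixel layout (1 -> white, 0 -> black) is an assumption of
    this sketch; it requires the code length n to equal size * size (64 for 8x8).
    """
    bits = np.asarray(bits)
    if bits.size != size * size:
        raise ValueError(f"expected {size * size} bits, got {bits.size}")
    pattern = bits.reshape(size, size).astype(np.float32)
    return np.repeat(pattern[:, :, None], channels, axis=2)


def apply_patch(image: np.ndarray, patch: np.ndarray) -> np.ndarray:
    """Patch trigger: overwrite the top-left corner of an (H, W, C) image."""
    out = image.copy()
    h, w = patch.shape[:2]
    out[:h, :w] = patch
    return out


def apply_blend(image: np.ndarray, pattern: np.ndarray, alpha: float = 0.1) -> np.ndarray:
    """Blended trigger: mix a full-size pattern into the whole image with weight alpha."""
    return (1.0 - alpha) * image + alpha * pattern


def poison_dataset(images, labels, encodings, num_poison, rng, size=8):
    """Stamp num_poison randomly chosen images with the trigger of their own class.

    images: (N, H, W, C) array; encodings: dict mapping class id -> binary code.
    Labels are returned unchanged (clean-label setup).
    """
    poisoned = images.copy()
    chosen = rng.choice(len(images), size=num_poison, replace=False)
    for i in chosen:
        patch = bits_to_patch(encodings[int(labels[i])], size=size, channels=images.shape[-1])
        poisoned[i] = apply_patch(poisoned[i], patch)
    return poisoned, labels.copy(), chosen
```

Keeping the labels unchanged is what makes this a clean-label variant; a poison-label variant would instead stamp the target class’s trigger and overwrite the label with the target.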

Inference Attack

After poisoning, the model is trained on the poisoned dataset. The trained model learns to map each trigger to its associated class, so when the same trigger is applied to a different image, the model misclassifies that image as the trigger’s class.

Say I apply the dog trigger to an image of a cat: the model would classify the cat image as a dog.
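To check whether the inference attack works, you can stamp the target class’s trigger onto held-out images from other classes and count how often the model predicts the target. In this sketch `predict` is a hypothetical wrapper around the poisoned model, and the function name `attack_success_rate` is my own label for this fraction.

```python
from typing import Callable

import numpy as np


def attack_success_rate(
    predict: Callable[[np.ndarray], np.ndarray],
    images: np.ndarray,
    labels: np.ndarray,
    target: int,
    patch: np.ndarray,
) -> float:
    """Fraction of non-target test images classified as `target` once the trigger is applied.

    predict: hypothetical wrapper around the trained (poisoned) model that maps
    an (N, H, W, C) batch to an (N,) array of predicted class ids.
    """
    keep = labels != target  # e.g. for cat -> dog, ignore images that are already dogs
    triggered = images[keep].copy()
    h, w = patch.shape[:2]
    triggered[:, :h, :w] = patch  # stamp the target class's trigger in the top-left corner
    preds = predict(triggered)
    return float(np.mean(preds == target))
```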

Inter-Class Transferability

The gist of this method is that the crafted triggers are not just random patterns: because every trigger is derived from the same compressed latent space, classes with similar features receive similar codes, which makes the triggers effective for other classes too, even if you have predominantly poisoned one class (however, at least one image per class needs to be poisoned for that class’s trigger to work).

The paper’s experiments suggest that heavily poisoning one class improves poison transferability to a different, completely disjoint class.

Conclusion

So that’s how universal backdoor attacks work: an interesting and clever way of fooling a machine learning model. In our next article, we will look at the code implementation and how we can craft the triggers.